Semantic Image Segmentation on MNISTDD-RGB

A project in which I customize U-Net for semantic segmentation of the MNIST Double Digits RGB (MNISTDD-RGB) dataset.

Summary

I customize U-Net for the MNIST Double Digits RGB (MNISTDD-RGB) dataset, which was provided by CMPUT 328 with a train-valid-test split.

The dataset consists of:

- input: a NumPy array in which each row holds the pixels of one image, shape: (number of samples, 12288) (flattened 64x64x3 images)

- output:

  - segmentations: a NumPy array in which each row holds the per-pixel labels of the corresponding image, shape: (number of samples, 4096) (flattened 64x64)
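The flattened shapes listed above can be turned back into image-shaped arrays before they are fed to a model. The following is a minimal sketch, not the project's actual loading code: the function name `unflatten` and the synthetic demo arrays are hypothetical, and it assumes the 12288 values are stored in height, width, channel order and that labels range over 11 classes.

```python
import numpy as np

IMAGE_SIZE = 64  # images are 64x64, per the dataset description
CHANNELS = 3     # RGB


def unflatten(images_flat, masks_flat):
    """Reshape flattened samples into image-shaped arrays.

    Assumes each image row is stored in (height, width, channel) order.
    """
    n = images_flat.shape[0]
    images = images_flat.reshape(n, IMAGE_SIZE, IMAGE_SIZE, CHANNELS)
    masks = masks_flat.reshape(n, IMAGE_SIZE, IMAGE_SIZE)
    return images, masks


# Synthetic stand-in data with the same shapes as the real dataset.
rng = np.random.default_rng(0)
demo_images = rng.integers(0, 256, size=(4, 12288), dtype=np.uint8)
demo_masks = rng.integers(0, 11, size=(4, 4096), dtype=np.uint8)  # 11 classes is an assumption

images, masks = unflatten(demo_images, demo_masks)
print(images.shape, masks.shape)  # (4, 64, 64, 3) (4, 64, 64)
```

If the model expects channels-first input, as PyTorch convolution layers do, `images.transpose(0, 3, 1, 2)` gives shape (number of samples, 3, 64, 64).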

My customized U-Net model achieves an accuracy of 87%.
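The README does not say how accuracy is measured. One common choice for segmentation is per-pixel accuracy, the fraction of pixels whose predicted label matches the ground truth. The sketch below illustrates that metric under this assumption; `pixel_accuracy` is a hypothetical helper, not the course's evaluation code.

```python
import numpy as np


def pixel_accuracy(pred, target):
    """Fraction of pixels where the predicted label equals the true label."""
    pred = np.asarray(pred)
    target = np.asarray(target)
    if pred.shape != target.shape:
        raise ValueError("pred and target must have the same shape")
    return float((pred == target).mean())


# Tiny worked example: three of four pixels match.
target = np.array([[0, 1, 1, 10]])
pred = np.array([[0, 1, 2, 10]])
print(pixel_accuracy(pred, target))  # 0.75
```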

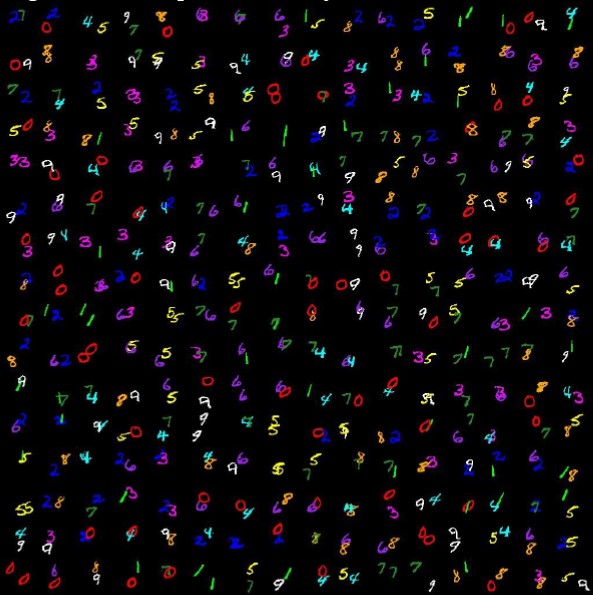

MNIST Double Digits RGB Dataset Sample.

I apply semantic segmentation in which the background is black and each digit is colored.
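A label mask can be rendered this way by looking up each pixel's label in a color palette. This is a rough sketch of one way to do it, not the project's visualization code: the class count of 11, the background label of 10, and the helper names `make_palette` and `colorize` are all assumptions made for illustration.

```python
import numpy as np

NUM_CLASSES = 11       # assumption: ten digit classes plus one background class
BACKGROUND_LABEL = 10  # assumption: the label used for background pixels


def make_palette(num_classes, background_label, seed=0):
    """Build one RGB color per class, with the background forced to black."""
    rng = np.random.default_rng(seed)
    # Values in [64, 255] keep every non-background color visibly non-black.
    palette = rng.integers(64, 256, size=(num_classes, 3), dtype=np.uint8)
    palette[background_label] = 0
    return palette


def colorize(mask, palette):
    """Map an (H, W) integer label mask to an (H, W, 3) RGB image."""
    return palette[np.asarray(mask, dtype=np.intp)]


# Synthetic mask: background everywhere, with two square "digit" regions.
demo_mask = np.full((64, 64), BACKGROUND_LABEL)
demo_mask[10:30, 10:30] = 3
demo_mask[30:50, 30:50] = 7

palette = make_palette(NUM_CLASSES, BACKGROUND_LABEL)
rgb = colorize(demo_mask, palette)
print(rgb.shape)  # (64, 64, 3)
```

Indexing the palette with the mask does the lookup for every pixel at once, so no explicit loop over pixels is needed.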

References

milesial. (2017). Pytorch-UNet, from https://github.com/milesial/Pytorch-UNet

I used CMPUT 328’s code templates from:

Assignment 8: Image Segmentation/predict.py from A8_submission and Image Segmentation/predict.py from A8_main